London-based, Microsoft-backed artificial intelligence company Builder.ai, once valued at $1.5 billion, has filed for bankruptcy after it was revealed that its much-touted ‘neural network’ was actually a workforce of more than 700 engineers based in India. But Builder.ai was not the first, the only, or the biggest company to pass off human labor as AI. Amazon and others did much the same and got away with it. Read on to find out more.

Imagine that Siri or Alexa is actually a lady with a headset sitting at a call centre in Bangalore, faking an accent and answering your queries in real time with a slightly seductive voice. That’s not what is really happening (I hope) but just imagine that for a second. And that’s what Builder.ai was: an AI ‘assistant’ that was really hundreds of coders in a BPO office somewhere in India.

The thing is, several high-profile companies have marketed their products as powered by advanced AI or automation, only for it to be revealed that much of the work was actually performed by humans, often in remote or lower-wage locations.

Here are notable examples:

Amazon

Ah, the things this company will do to make you spend more money.

Amazon’s “Just Walk Out” technology — used in Amazon Go stores — was advertised as a highly automated system using computer vision, sensor fusion, and deep learning to detect what items customers took and then automatically charge them without checkout.

However, reporting in 2024 (notably by The Information and Business Insider) revealed that:

- The system relied heavily on human reviewers, particularly offshore workers in India, to manually review video footage and help determine what customers were taking.

- In 2022, as many as 1,000 workers were involved in this manual labeling and verification process.

- About 700 of every 1,000 transactions reportedly needed human review as of mid-2022, contradicting the impression that the system was entirely AI-driven.

- These human workers helped train the AI models but also intervened live or after the fact when the AI struggled to make confident decisions.
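For the curious, here is roughly what "humans step in when the AI isn't confident" can look like in code. This is a minimal sketch of generic confidence-threshold routing, not Amazon's actual system: the `Prediction` class, the `route` and `human_review_rate` functions, the 0.9 cutoff, and the sample items are all made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical cutoff: any guess below this goes to a person.
CONFIDENCE_THRESHOLD = 0.9


@dataclass
class Prediction:
    item: str          # what the model thinks the shopper picked up
    confidence: float  # the model's confidence in that guess, 0.0 to 1.0


def route(prediction: Prediction, threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Return "auto" if the guess is trusted, otherwise "human_review"."""
    return "auto" if prediction.confidence >= threshold else "human_review"


def human_review_rate(predictions: list[Prediction],
                      threshold: float = CONFIDENCE_THRESHOLD) -> float:
    """Fraction of predictions that end up in front of a human reviewer."""
    if not predictions:
        return 0.0
    flagged = sum(1 for p in predictions if route(p, threshold) == "human_review")
    return flagged / len(predictions)


# Invented sample data, purely for illustration.
batch = [
    Prediction("oat milk", 0.97),
    Prediction("granola bar", 0.62),
    Prediction("sparkling water", 0.91),
    Prediction("avocado", 0.48),
]
for p in batch:
    print(p.item, "->", route(p))
print("human review rate:", human_review_rate(batch))
```

The point of the sketch: the higher you set the threshold (or the worse the model is), the more of the "automation" is quietly done by people. A review rate close to 1.0 means the humans are the product.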

In April 2024, Amazon began phasing out Just Walk Out at its Amazon Fresh grocery stores in favor of Dash Cart smart shopping carts, which offer a more transparent, hybrid approach to automation.

X.ai

Fun fact: I tried posting this story on my X account but X just wouldn’t let me. I mean, Twitter wouldn’t let me post things that are critical of Twitter. Hehe.

X.ai (the scheduling startup, which is a separate company from Elon Musk’s xAI and X) aimed to create a fully automated scheduling assistant, with its AI agents “Amy” and “Andrew” handling meeting arrangements without human intervention.

However, achieving this level of automation required substantial human input:

- Data Annotation and Training: To train its AI models, X.ai employed approximately 105 human “trainers” in the Philippines. These individuals were responsible for labeling data, such as identifying time zones and parsing natural language in emails, to improve the AI’s understanding and accuracy.

- Handling Complex Scenarios: While the AI handled routine scheduling tasks, complex or ambiguous situations sometimes necessitated human assistance. In such cases, human workers would step in to ensure accurate scheduling.

While X.ai’s vision was to provide a fully autonomous scheduling assistant, the reality involved significant human labor, especially in training the AI and managing complex scheduling scenarios. This underscores the challenges in creating AI systems capable of handling the intricacies of human communication without human support.

Unlike X.ai, some competitors like Clara Labs adopted a “human-in-the-loop” approach from the outset, combining AI with human oversight to manage scheduling tasks. This strategy nicely acknowledged the limitations of AI in understanding nuanced human communication and ensured higher accuracy in scheduling. This post is NOT sponsored by Clara Labs – or anyone at all.

DoNotPay

DoNotPay advertised itself as your “AI Lawyer”. That itself should have rung alarm bells.

In September 2024, the FTC charged DoNotPay with deceptive marketing practices, stating that the company falsely advertised its AI chatbot as a substitute for human legal services without adequate substantiation. The FTC found that DoNotPay had not tested whether its AI operated at the level of a human lawyer and had not employed attorneys to assess the quality and accuracy of its legal features.

As part of a settlement, DoNotPay agreed to pay $193,000 in monetary relief and to notify consumers who subscribed to the service between 2021 and 2023 about the limitations of its legal offerings. The company is also prohibited from advertising that its service performs like a real lawyer unless it has sufficient evidence to back up such claims.

DoNotPay itself used human lawyers. 🤷🏽‍♂️

This action was part of the FTC’s broader initiative, “Operation AI Comply,” aimed at cracking down on deceptive AI claims and schemes. Fantastic initiative, if you ask me.

Rytr “AI Writing Assistant”

Rytr, which markets itself as an AI-powered writing assistant, was also targeted by the FTC. In this case the complaint was about what the tool was being used to generate rather than about hidden human labor, but the product still leans on people far more than the word “AI” suggests.

Rytr utilizes advanced language models, such as GPT-3, which are trained on vast datasets curated and annotated by human experts. This human involvement is crucial for teaching the AI to understand context, grammar, and nuances in language.

In real-world applications, Rytr serves as an assistant rather than a replacement for human writers (to be fair, they are calling themselves assistants). Users often need to:

- Provide Clear Prompts: The quality of Rytr’s output heavily depends on the clarity and specificity of user inputs.

- Edit and Refine Output: While Rytr can generate coherent content, it may lack the depth, creativity, or contextual understanding that human writers possess. Therefore, human editing is often necessary to polish the content.

- Ensure Accuracy and Originality: Rytr includes tools like plagiarism checkers, but human judgment is essential to verify the accuracy and originality of the content, especially for specialized or sensitive topics.

In September 2024, the U.S. Federal Trade Commission (FTC) took action against Rytr for offering a feature that allowed users to generate fake product reviews. The FTC stated that some subscribers used this tool to create thousands of reviews with minimal user input, which could mislead consumers. As a result, Rytr agreed to discontinue its review generation services. However, the company did not admit to any wrongdoing.

While Rytr may be a powerful AI writing assistant (I wouldn’t know because I don’t use AI writing assistants), it functions best as a collaborative tool that enhances human creativity and efficiency. Human input remains essential for guiding the AI, refining its output, and ensuring ethical use of the content generated.

Tesla

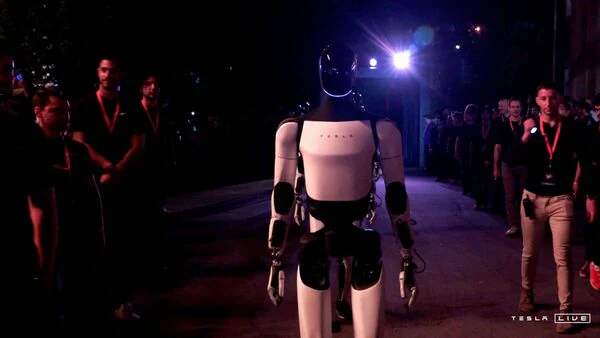

Tesla’s Optimus robots. And arguably, their autonomous driving tech.

I love telling the Elon fanboys that their digital God lied about a lot of things, including that their ‘autonomous’ robots were remotely controlled by humans. Hehe.

It’s All For a Few Dollars More

These cases illustrate a recurring pattern: companies leveraging the hype around AI and automation to attract investment and customers, while quietly relying on human labor, often in less visible parts of the world.

Why do they do it? Because ethics mean nothing to these companies if they can get a few more dollars (or rupees in our case), and they’ll happily ride the AI and automation hype to do it.

So the next time you see or hear some CEO say ‘AI Powered’ or ‘Fully Automated’, be suspicious.

Bonus – gotta love this thread. AI got a new meaning now.

Thanks for reading! Share this with three friends – you can try using AI to share it for you. I write at least twice a week but if you want insight like this straight into your inbox, check out my Substack. And follow me on the social media platform of your choice!